Although Kubernetes is a very complex system, installing it doesn’t have to be hard if you use existing tooling.

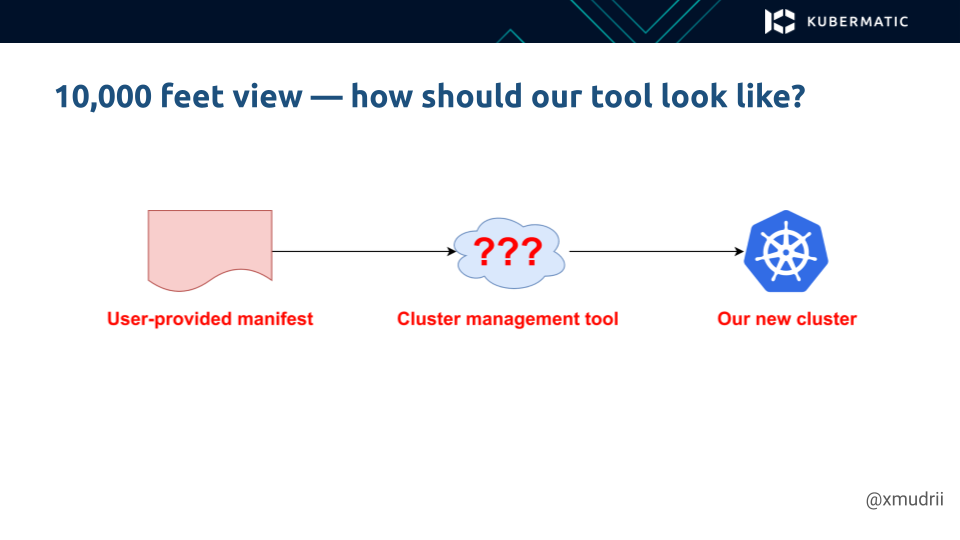

In this blog post, I want to share what we learned while creating KubeOne, a Kubernetes cluster lifecycle management tool. Let’s start with a 10,000-foot view of what cluster lifecycle management should look like:

We have a user-provided manifest that defines what our cluster should look like. The manifest is given to the tool, which creates a new cluster, based on that manifest. This allows us to implement a declarative approach, which is widely used in the Kubernetes ecosystem and has a lot of advantages.
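To make the declarative idea concrete, here is a minimal sketch in Python. It is not how KubeOne is implemented; the `ClusterManifest` fields and the `plan` function are hypothetical names chosen for illustration, and the version strings in the example are arbitrary. The point is only that the tool compares the desired state from the manifest with the observed state and derives the actions from the difference.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ClusterManifest:
    """Hypothetical, heavily simplified manifest: what the user wants."""
    name: str
    kubernetes_version: str
    control_plane_nodes: int


def plan(desired: ClusterManifest, current: Optional[ClusterManifest]) -> List[str]:
    """Compare desired and observed state and list the actions needed.

    `current` is None when no cluster exists yet.
    """
    if current is None:
        return [f"create cluster {desired.name} at {desired.kubernetes_version}"]

    actions = []
    if current.kubernetes_version != desired.kubernetes_version:
        actions.append(
            f"upgrade {current.kubernetes_version} -> {desired.kubernetes_version}"
        )
    if current.control_plane_nodes != desired.control_plane_nodes:
        actions.append(
            f"scale control plane {current.control_plane_nodes} -> "
            f"{desired.control_plane_nodes}"
        )
    return actions
```

Running `plan` twice with the same manifest and an already matching cluster yields an empty list, which is the property that makes the declarative approach safe to re-run.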

So, what should our tool do? The Kubernetes community is very large and has created a lot of tools to make managing clusters easier. We all know from experience that it’s always better to reuse as many tools as possible, so you don’t have to implement everything from scratch.

To answer the question, let’s have a look at Kubeadm, the official Kubernetes cluster management tool that creates production-ready, conformant clusters right out of the box.

Kubeadm does everything Kubernetes:

- It provisions the control plane and the worker nodes

- It can upgrade the cluster

- It can manage configuration and certificates

And so much more.

The best part is that it works anywhere, including cloud, on-prem and bare metal.

However, by design, Kubeadm only takes care of Kubernetes itself and Kubernetes cluster management. It’s supposed to be used as a building block in other tools and scripts.

So what about everything non-Kubernetes, like:

- Provisioning infrastructure and instances

- Configuring instances

- Installing packages like a container runtime, Kubeadm or Kubelet

- Running Kubeadm itself

- Installing addons like CNI or metrics-server

For configuring instances, installing packages and running Kubeadm, we can use well-established tools and protocols like cloud-init or SSH.
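As a rough illustration of the SSH route, the sketch below builds the command line a tool could use to run Kubeadm on a remote instance. The helper names, the user, the address and the config path are all assumptions made for this example; only the `ssh` CLI and the `kubeadm init --config` flag are real. The code deliberately builds the command without executing it, so it can be inspected or logged first.

```python
import shlex
from typing import List


def kubeadm_init_command(config_path: str) -> str:
    """Build the remote shell command that bootstraps the first control plane node."""
    # shlex.quote guards against spaces or shell metacharacters in the path.
    return "sudo kubeadm init --config " + shlex.quote(config_path)


def ssh_command(user: str, host: str, remote_command: str) -> List[str]:
    """Build the argv for running one command on a remote host via the ssh CLI."""
    return ["ssh", f"{user}@{host}", remote_command]


# Hypothetical instance details, for illustration only.
argv = ssh_command("ubuntu", "10.0.0.10", kubeadm_init_command("/tmp/kubeadm.yaml"))
```

To actually run it, the resulting list could be passed to `subprocess.run(argv, check=True)`. A real tool would also need to handle host keys, retries and timeouts.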

For installing addons, we can use Kubectl, client-go, Helm…or whatever else works best for you.

Provisioning infrastructure and instances is a little more challenging. There are many providers and setups: cloud (AWS, GCP and so on), on-prem (vSphere, OpenStack), bare metal, edge and the like.

Every provider has different features, structures and APIs, with endless possibilities in terms of architecture, making the challenge even greater.

Let’s look at three ways of handling the infrastructure problem:

- Implement infrastructure management in the tool itself.

- Use an external infrastructure management tool (e.g., Terraform).

- Use a combination of the previous two, i.e. the hybrid approach.

Managing the infrastructure ourselves assumes that the manifest includes the information about how the infrastructure should be created, as well as information about the Kubernetes cluster.

The tool creates the infrastructure and instances, and then installs and provisions Kubernetes on those instances. The problem here is that we lose the ability to create clusters anywhere. If our tool doesn’t support a certain provider or architecture, the user will not be able to use it.

However, we can easily address this by using an external infrastructure management tool, like Terraform, to create the infrastructure and instances, and provide the information about those instances, as well as how to access them, to our tool. The user-provided manifest will contain information specific to the desired Kubernetes cluster. Then the tool can use SSH to access these instances and install Kubernetes there.
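The handoff between Terraform and the tool can be sketched as follows. `terraform output -json` prints a JSON object that maps each output name to an entry with a `value` field; the output names `control_plane_ips` and `ssh_user` below are assumptions for this example and not necessarily the names KubeOne expects.

```python
import json
from typing import Dict, List


def control_plane_hosts(terraform_output_json: str) -> List[Dict[str, str]]:
    """Turn `terraform output -json` text into a list of SSH targets."""
    outputs = json.loads(terraform_output_json)
    # Hypothetical output names; a real setup defines its own.
    addresses = outputs["control_plane_ips"]["value"]
    ssh_user = outputs["ssh_user"]["value"]
    return [{"address": ip, "ssh_user": ssh_user} for ip in addresses]


# Sample input in the shape produced by `terraform output -json`.
sample = json.dumps({
    "control_plane_ips": {
        "sensitive": False,
        "type": ["list", "string"],
        "value": ["10.0.0.10", "10.0.0.11", "10.0.0.12"],
    },
    "ssh_user": {"sensitive": False, "type": "string", "value": "ubuntu"},
})

hosts = control_plane_hosts(sample)
```

With this split, the tool never needs to know which provider created the instances. It only needs addresses and access details.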

The hybrid approach, which is also used by KubeOne, is the best option, because it offers a lot of flexibility.

The external tool is used to create the infrastructure and control plane instances. Then our tool provisions and manages the Kubernetes control plane. Lastly, we implement a Cluster API-based Kubernetes controller that manages worker nodes. This allows us to manage our worker nodes using the Kubernetes API and use features like auto-scaling, and more.
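The idea behind managing worker nodes with a controller can be sketched as a reconcile step: compare the desired number of workers with the machines that exist, then create or delete machines to close the gap. This is a simplified illustration and not Cluster API's actual implementation; `reconcile_workers` and the `worker-N` naming scheme are hypothetical.

```python
from typing import Iterable, List, Tuple


def reconcile_workers(
    desired_replicas: int,
    existing_machines: Iterable[str],
    name_prefix: str = "worker",
) -> Tuple[List[str], List[str]]:
    """Return (machines_to_create, machines_to_delete) for one reconcile pass."""
    existing = sorted(existing_machines)

    # Too many machines: delete the surplus from the end of the sorted list.
    if len(existing) > desired_replicas:
        return [], existing[desired_replicas:]

    # Too few machines: pick unused names until the desired count is reached.
    taken = set(existing)
    to_create: List[str] = []
    index = 0
    while len(existing) + len(to_create) < desired_replicas:
        candidate = f"{name_prefix}-{index}"
        if candidate not in taken:
            to_create.append(candidate)
            taken.add(candidate)
        index += 1
    return to_create, []
```

An autoscaler then only has to change `desired_replicas`; the controller works out what to create or remove on the next pass.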

Where to Learn More

- KubeOne Demo: Deploy and Manage Your Cluster Everywhere With KubeOne

- Documentation: What Is KubeOne?

- Find KubeOne on GitHub