Anyone who has run an IT system for any length of time can tell a story about a forgotten machine or three running somewhere that no one even knows about. From the server in the back room that still runs Fortran to that EC2 instance from 2012 still on your monthly bill, the sprawl of IT systems keeps growing. It can quickly become an unmanageable mess of snowflake servers, both physical and virtual. System administrators have fought this sprawl by managing servers like cattle rather than pets and by implementing workflows that require approval from department heads and conformance to security policies, such as using only pre-approved images or submitting requests through Jira. In our experience working with customers, many of these “systems” scale only by adding people, with each addition to the sprawl consuming more management time. And while many companies have established processes for managing their physical and virtualized environments, they face the same set of problems all over again when they add Kubernetes to their tech stack.

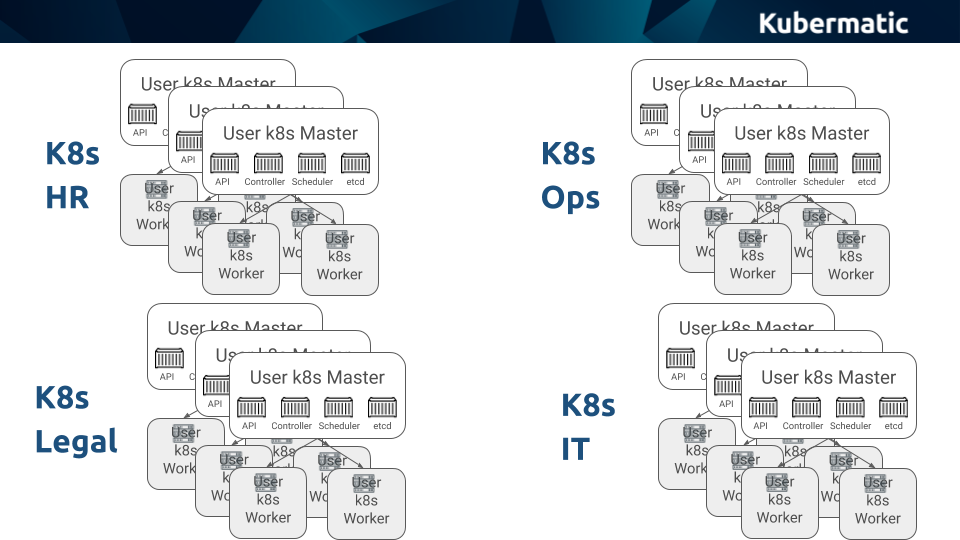

Many companies have moved past the proof-of-concept (PoC) phase with Kubernetes and are quickly finding a new sprawl of Kubernetes clusters popping up. Different departments and business units are spinning up clusters in on-premises, private cloud, and public cloud environments. Each of them may run multiple clusters provisioned through a variety of tools, such as Kubespray and Kops, or through managed CaaS offerings such as Google Kubernetes Engine and Azure Kubernetes Service.

Kubernetes clusters have become the new deployment boundaries for applications. Although namespaces provide isolation boundaries within a cluster, many customers prefer to isolate applications by running them on separate clusters. Each business function has multiple clusters running across different environments: on-premises, private cloud, self-provisioned clusters in the public cloud, and managed clusters in the public cloud. Just keeping track of these clusters, let alone actually managing them, poses a huge challenge for IT and DevOps teams.

To bring all of these clusters into the desired, compliant state, the same set of kubectl commands has to be run against each cluster so that they share a consistent configuration, policies, quotas, and RBAC roles. Doing this cluster by cluster is, once again, laborious and error-prone.
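To make the scaling problem concrete, here is a minimal Python sketch that only builds (and does not run) the `kubectl apply` invocations needed to push the same manifests to every cluster. It assumes each cluster is reachable through its own kubeconfig context, selected with kubectl's `--context` flag; the context names and manifest file names are hypothetical.

```python
from itertools import product


def build_apply_commands(contexts, manifests):
    """Build (but do not run) one `kubectl apply` invocation per cluster/manifest pair.

    Assumes each cluster is reachable through its own kubeconfig context.
    """
    return [
        ["kubectl", "--context", ctx, "apply", "-f", path]
        for ctx, path in product(contexts, manifests)
    ]


# Hypothetical context names and manifest files, for illustration only.
contexts = ["onprem-prod", "gke-staging", "aks-dev"]
manifests = ["rbac.yaml", "quotas.yaml", "policies.yaml"]

commands = build_apply_commands(contexts, manifests)
print(len(commands))  # 9: three clusters times three manifests
for cmd in commands:
    print(" ".join(cmd))
```

Each entry could be executed with `subprocess.run(cmd, check=True)`, but the number of commands grows as clusters times manifests, every failure and every newly added cluster still has to be tracked by hand, and nothing here notices when a cluster later drifts from what was applied.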

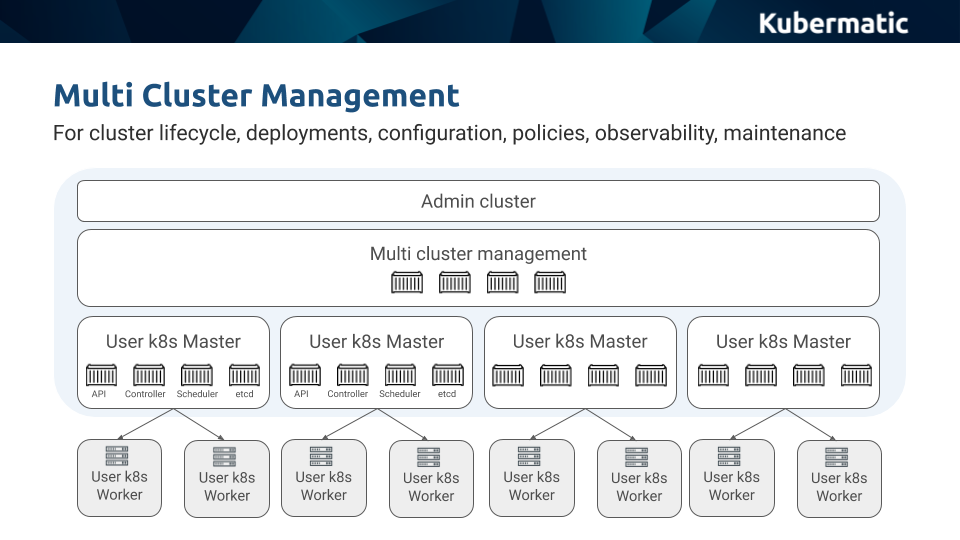

Kubernetes Multi Cluster Management — A Control Plane of the Control Planes

What enterprise IT needs is a multi cluster management solution, such as Kubermatic Kubernetes Platform, that acts as an overarching control plane for all Kubernetes clusters launched within an organization. With multi cluster management, IT can keep track of each cluster and ensure that it complies with a set of predefined policies. However, this cannot be a fire-and-forget activity, because the vast majority of IT resources are spent on Day 2 operations. The meta control plane must also continuously detect drift between each cluster's actual configuration and its desired configuration, and bring the cluster back to the desired state.

We call it multi cluster management because it manages the control plane and worker nodes of every Kubernetes cluster. A command sent to the multi cluster manager is automatically applied to the control plane of each cluster to bring the clusters into the desired state, whether the operation is creation, upgrade, drift correction, or deletion.

Since the multi cluster manager has visibility into each cluster, it can collect and aggregate metrics on infrastructure and application health. This makes it THE single pane of glass for both configuration and observability.

Similar to how Kubernetes controllers maintain the desired state of Deployments, StatefulSets, Jobs, and DaemonSets, the multi cluster manager ensures that every cluster maintains its desired configuration throughout its entire life cycle.

For example, if a developer needs a new cluster for testing, they can self-provision one based on a company blueprint, within quotas defined by the operations team, which automatically gets observability into the new cluster. If an organization has a policy such as “no containers may run as root,” the multi cluster manager can detect when that policy is deleted from a cluster and automatically reapply it. This is similar to how a Kubernetes controller maintains the desired number of replicas for a Deployment.

In other words, the multi cluster manager is to a Kubernetes cluster what a controller is to a Deployment.
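The controller analogy can be made concrete with a toy reconcile loop. This minimal Python sketch is not how Kubermatic Kubernetes Platform is implemented; it assumes each cluster's configuration can be modeled as a dict of named objects (the `Cluster` class, `reconcile` function, and object names are hypothetical) and shows the compare-and-reapply step that undoes drift, such as a deleted "no root" policy.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy model of a cluster: live objects keyed by name (hypothetical)."""
    name: str
    objects: dict = field(default_factory=dict)


def reconcile(cluster: Cluster, desired: dict) -> list:
    """Compare desired vs. live objects and reapply anything missing or drifted.

    Returns the list of (action, object_name) pairs that were taken.
    Objects present in the cluster but absent from `desired` are left untouched.
    """
    actions = []
    for name, spec in desired.items():
        live = cluster.objects.get(name)
        if live == spec:
            continue  # already in the desired state
        actions.append(("create" if live is None else "update", name))
        cluster.objects[name] = dict(spec)  # reapply the desired spec
    return actions


desired = {
    "policy/no-root": {"runAsNonRoot": True},
    "quota/team-a": {"cpu": "8"},
}
# The policy was deleted and the quota was edited by hand: two kinds of drift.
dev = Cluster("dev-1", objects={"quota/team-a": {"cpu": "16"}})

print(reconcile(dev, desired))  # [('create', 'policy/no-root'), ('update', 'quota/team-a')]
print(reconcile(dev, desired))  # [] because the cluster is back in the desired state
```

A real controller would run this comparison repeatedly, triggered by watch events or on a timer, for every registered cluster. Running it twice here shows that reconciliation is idempotent once the cluster has converged.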

There is another commonality between the Kubernetes control plane and a multi cluster manager. When a workload is scheduled, its placement can be influenced through labels and selectors or through node affinity. A nodeSelector or nodeAffinity rule ensures that the workload lands on one of the nodes that match the criteria. Similarly, the multi cluster manager can be instructed to push a deployment, configuration, or policy only to a subset of clusters based on their labels. Just as we label nodes and use a selector in a deployment to target specific nodes, we label each cluster and use a selector in the multi cluster manager to filter the target clusters.
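To illustrate the nodeSelector analogy, here is a minimal Python sketch of label-selector matching applied to clusters instead of nodes. The `matchLabels`/`matchExpressions` semantics (operators `In`, `NotIn`, `Exists`, `DoesNotExist`) follow Kubernetes label selectors; the cluster names, labels, and the `select_clusters` helper are hypothetical and do not represent a Kubermatic API.

```python
def matches(labels, match_labels=None, match_expressions=None):
    """Evaluate a Kubernetes-style label selector against one set of labels."""
    for key, value in (match_labels or {}).items():
        if labels.get(key) != value:
            return False
    for expr in match_expressions or []:
        key, op, values = expr["key"], expr["operator"], expr.get("values", [])
        if op == "In":
            ok = key in labels and labels[key] in values
        elif op == "NotIn":
            ok = key not in labels or labels[key] not in values
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unsupported operator: {op}")
        if not ok:
            return False
    return True


def select_clusters(clusters, **selector):
    """Return the names of clusters whose labels satisfy the selector (hypothetical helper)."""
    return sorted(name for name, labels in clusters.items() if matches(labels, **selector))


# Hypothetical cluster inventory with labels, analogous to labeled nodes.
clusters = {
    "onprem-prod": {"env": "prod", "region": "eu", "provider": "vsphere"},
    "gke-prod": {"env": "prod", "region": "us", "provider": "gcp"},
    "aks-dev": {"env": "dev", "region": "eu", "provider": "azure"},
}

print(select_clusters(clusters, match_labels={"env": "prod"}))
# ['gke-prod', 'onprem-prod']
print(select_clusters(clusters, match_expressions=[
    {"key": "region", "operator": "In", "values": ["eu"]},
    {"key": "env", "operator": "NotIn", "values": ["dev"]},
]))
# ['onprem-prod']
```

As with nodes, all `matchLabels` entries and all `matchExpressions` must hold for a cluster to be selected, so adding terms only narrows the target set.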

In summary, as the number of clusters within an organization grows, a multi cluster management solution is required to take control of cluster management and avoid the chaos of IT sprawl. At Kubermatic, we knew multi cluster management would be a problem for our customers from our very first engagement, which involved an on-premises data center and production across two clouds. We designed, built, and open-sourced Kubermatic Kubernetes Platform to help our customers centralize their multi cluster management and simplify their operations.

Kubermatic Kubernetes Platform - A Multi Cluster Manager

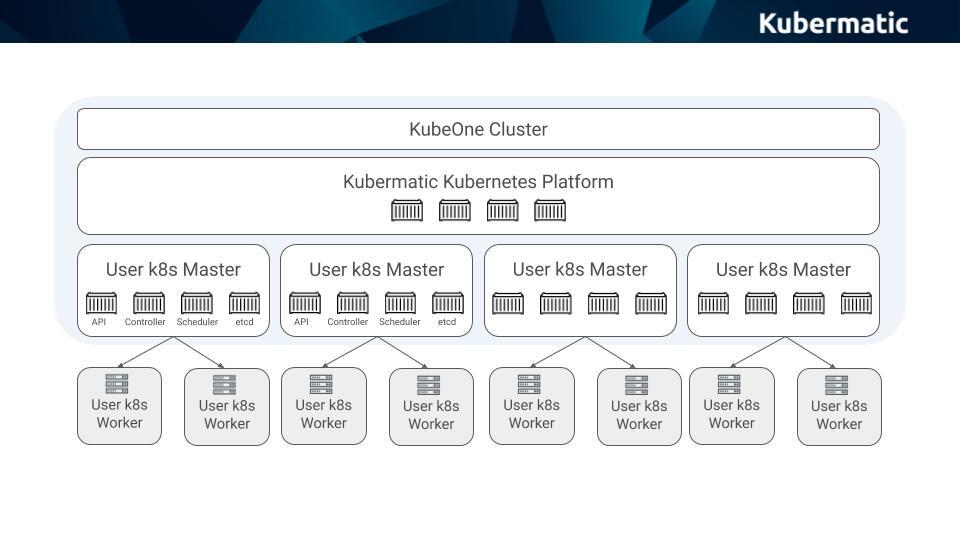

What is Kubermatic Kubernetes Platform? Simply put, it’s a Kubernetes-based multi cluster management solution known for its Kubernetes-in-Kubernetes architecture. Though this definition is technically correct, it does not tell the whole story. Beyond multi cluster management, Kubermatic Kubernetes Platform automates many operational tasks that are critical to managing infrastructure and workloads in hybrid cloud, multi-cloud, and edge environments.

The core component of Kubermatic Kubernetes Platform is actually Kubernetes itself: Kubernetes Operators and CustomResourceDefinitions (CRDs) automate cluster life cycle management, and kubectl is the command line tool. Rather than adding yet another tool to learn, Kubermatic Kubernetes Platform lets administrators reuse their existing Kubernetes knowledge of CRDs and kubectl to control cluster state.

Kubermatic Kubernetes Platform — The Hybrid Cloud, Multi Cloud and Edge Control Plane

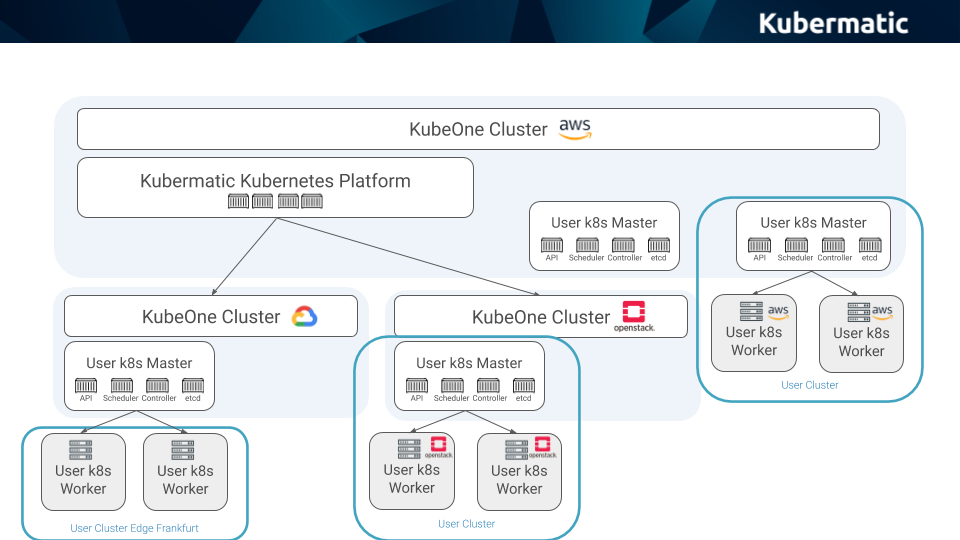

Kubermatic Kubernetes Platform is open source software that can run anywhere and manage Kubernetes clusters in a variety of environments, including on-premises data centers, AWS, Azure, GCP, and edge environments.

Kubermatic Kubernetes Platform first provisions an admin cluster using our open source tool KubeOne. Think of the admin cluster as the local control plane that handles the life cycle of managed clusters running on the same infrastructure. When additional user clusters are provisioned, each user cluster's control plane runs as a deployment of containers within a namespace of the admin cluster, while its worker nodes are provisioned using machine-controller.

To provision clusters in additional data centers or on other infrastructure providers, seed clusters can be set up to provide localized control plane management. They function similarly to the admin cluster but are under its control. Kubermatic Kubernetes Platform can launch highly available Kubernetes clusters that span multiple availability zones. To create and manage clusters, the underlying infrastructure provider is used only to create, update, and delete servers, whether physical or virtual, while Kubernetes Operators take care of the rest. Technically speaking, Kubermatic Kubernetes Platform can launch a managed cluster on any programmable infrastructure that supports running Kubernetes in high availability mode.

For customers that already have clusters running and want to use other tooling for part of their infrastructure, Kubermatic Kubernetes Platform supports connecting external, unmanaged clusters to its control plane. The key difference between managed and unmanaged clusters is life cycle management. While Kubermatic Kubernetes Platform owns everything from the creation to the termination of managed clusters, it has only partial control over external, unmanaged clusters. Connecting external clusters still gives you a single pane of glass over your whole Kubernetes infrastructure.

Key Components of Kubermatic Kubernetes Platform

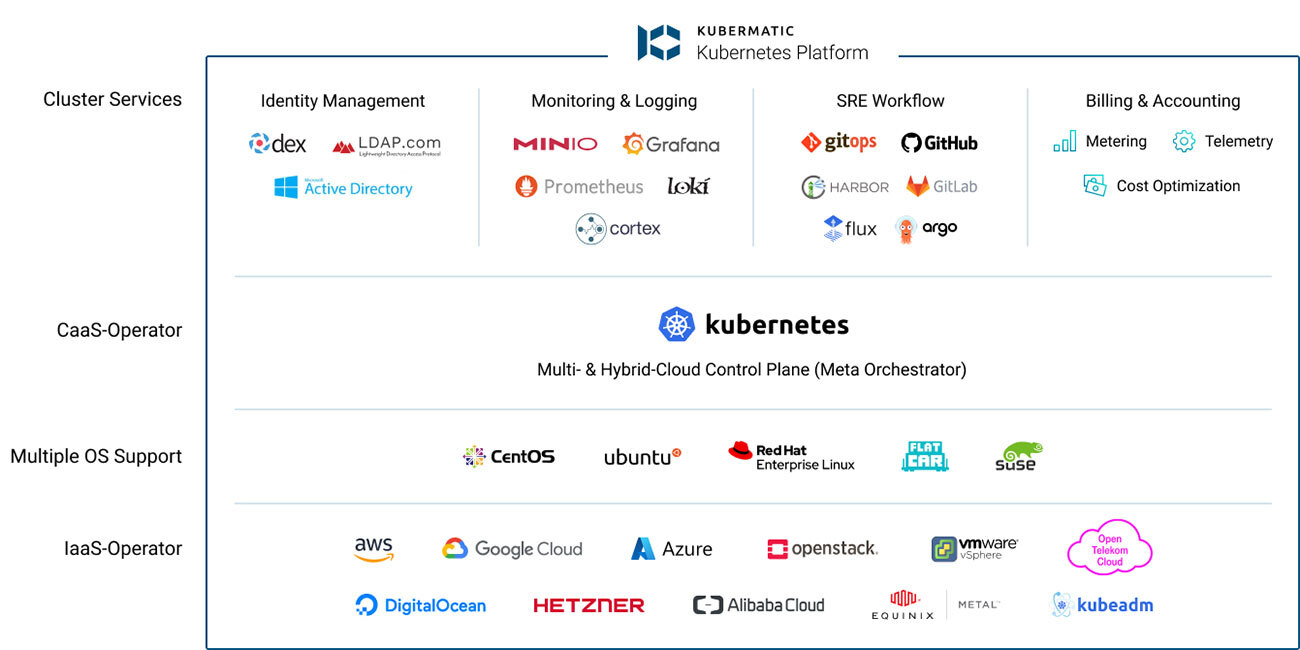

Apart from being a hybrid cloud, multi-cloud, and edge multi cluster management solution for Kubernetes, Kubermatic Kubernetes Platform can manage network policies, routing, security, cluster add-ons, and configuration management for workloads deployed across clusters.

Let’s take a look at the key components of Kubermatic Kubernetes Platform:

- Kubermatic Kubernetes Platform Control Plane: This component handles multi cluster management. It is responsible for managing the life cycle of managed clusters and for registering and deregistering external, unmanaged clusters.

- Kubermatic Kubernetes Platform Virtualization: Based on the open source KubeVirt project, it allows you to run containerized and virtualized workloads side by side. In addition, it can be used to slice up large bare-metal machines into smaller virtual machines to operate additional Kubernetes clusters.

- Kubermatic Kubernetes Platform Config Management: Based on GitOps, this component provides a centralized mechanism to push deployments, configuration, and policies to all participating clusters, both managed and unmanaged. A centrally accessible Git repository acts as the single source of truth for all clusters. A Kubermatic Kubernetes Platform Config Management agent running in each cluster monitors that cluster's state. When the state deviates from what is defined in Git, the agent automatically reapplies the configuration, bringing the cluster back to the desired state. This works for cluster state, policies, and cluster add-ons.

- KubeCarrier Service Platform (coming soon): An operator for operators that provides a catalog of applications and services that can be easily installed and maintained in every cluster.
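The drift check that the Config Management agent performs can be pictured as a field-level comparison between the manifest stored in Git and the live object in the cluster. This Python sketch is an illustration under stated assumptions, not the agent's actual algorithm: the `find_drift` function and the example objects are hypothetical, only fields declared in the Git manifest are compared (so server-populated fields such as `status` or `metadata.uid` are ignored), and lists are compared as whole values.

```python
def find_drift(desired, live, path=""):
    """List (field_path, desired_value, live_value) for every declared field that differs.

    Only fields declared in the Git manifest are compared, so fields the cluster
    fills in on its own (status, uid, defaults) are not treated as drift.
    Lists are compared as whole values, not element by element.
    """
    if isinstance(desired, dict) and isinstance(live, dict):
        drift = []
        for key, want in desired.items():
            child = f"{path}.{key}" if path else key
            drift.extend(find_drift(want, live.get(key), child))
        return drift
    return [] if desired == live else [(path, desired, live)]


# Hypothetical manifest as stored in Git.
git_manifest = {
    "kind": "ResourceQuota",
    "metadata": {"name": "team-a", "namespace": "team-a"},
    "spec": {"hard": {"cpu": "8", "memory": "16Gi"}},
}
# Hypothetical live object: someone raised the CPU quota by hand.
live_object = {
    "kind": "ResourceQuota",
    "metadata": {"name": "team-a", "namespace": "team-a", "uid": "1234"},
    "spec": {"hard": {"cpu": "16", "memory": "16Gi"}},
    "status": {"used": {"cpu": "2"}},
}

print(find_drift(git_manifest, live_object))  # [('spec.hard.cpu', '8', '16')]
```

If `find_drift` returns anything, an agent following this model would reapply the Git manifest and check again. Restricting the comparison to declared fields is a deliberate choice: comparing whole objects would flag every server-populated field as drift.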