In this blog post, we look at how to use the KubeOne project to deploy a 5G core proof of concept (PoC). 5G is the next generation of mobile technology, with an emphasis on cloud native technologies, in which Kubernetes is expected to play an important role. KubeOne is a production-grade, open source Kubernetes cluster lifecycle management tool. AWS is used as the cloud provider in this post.

The 5G core solution used here is free5gc (version 3.0.1), which is open source software. A successful free5gc deployment has a few requirements:

- A specific Linux kernel (5.0.0-23-generic) is required for GTP-U handling in the UPF. Further information about this requirement and about compiling the gtp5g kernel module can be found on the free5gc page.

- free5gc requires static IP addresses for service initialization, whereas most CNI implementations allocate IP addresses dynamically. To resolve this, an Open vSwitch based network controller from sdnvortex was used to create a second network interface in each Kubernetes pod. This means the AWS AMI needs to have Open vSwitch pre-installed; a dedicated AMI was built for this deployment and is publicly available. In addition to Open vSwitch, a bridge interface must be created on the node before the pods are scheduled, so that the pods' additional network interfaces can communicate with one another.

The sdnvortex controller is deployed as a DaemonSet and uses an init container to create the second network interface.

Note: There are several ways to attach multiple interfaces to a Kubernetes pod; the sdnvortex controller was chosen for this write-up because it is fairly easy to set up.

- Because each pod needs more than one network interface, a single worker node was used for this PoC. Using multiple worker nodes might add complexity well beyond what a simple PoC needs, especially in public cloud environments. However, a multi-node setup should be readily achievable on on-premises platforms such as vSphere, or on bare metal.
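Before applying any manifests, it can be worth confirming that the worker node meets the kernel requirement. The Python sketch below is a hypothetical pre-flight check, assuming it runs directly on the worker node and that the GTP-U module built from the free5gc instructions is loaded under the name `gtp5g`; the function names are illustrative and not part of free5gc or KubeOne.

```python
import platform
from pathlib import Path

# Kernel release required by free5gc 3.0.1 for GTP-U handling in the UPF.
REQUIRED_KERNEL = "5.0.0-23-generic"
# Assumption: the GTP-U kernel module is loaded under this name.
GTP_MODULE = "gtp5g"


def kernel_matches(release: str, required: str = REQUIRED_KERNEL) -> bool:
    """Return True if the kernel release string is exactly the required one."""
    return release.strip() == required


def module_loaded(proc_modules: str, name: str = GTP_MODULE) -> bool:
    """Return True if `name` is listed in the given /proc/modules content.

    Each line of /proc/modules starts with the module name followed by a space.
    """
    return any(line.split(" ", 1)[0] == name for line in proc_modules.splitlines())


def preflight() -> list[str]:
    """Hypothetical node check: return a list of problems (empty means ready)."""
    problems = []
    release = platform.release()
    if not kernel_matches(release):
        problems.append(f"kernel is {release}, expected {REQUIRED_KERNEL}")
    try:
        modules = Path("/proc/modules").read_text()
    except OSError:  # e.g. not running on Linux
        modules = ""
    if not module_loaded(modules):
        problems.append(f"kernel module {GTP_MODULE} is not loaded")
    return problems


if __name__ == "__main__":
    for problem in preflight():
        print("NOT READY:", problem)
```

The parsing helpers take plain strings rather than reading files themselves, so they can be checked against captured `/proc/modules` output from another machine.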

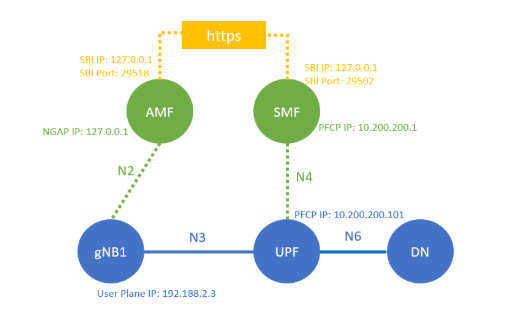

Below is the diagram (from the free5gc repo) for the 5Gc deployment:

The only difference between this diagram and the PoC is that, instead of localhost addresses, each pod uses a static IP address on its second network interface. The IP addresses on the second network are:

- AMF: 192.168.2.2
- SMF: 192.168.2.3
- AUSF: 192.168.2.4
- NRF: 192.168.2.5
- NSSF: 192.168.2.6
- UDM: 192.168.2.7
- UDR: 192.168.2.8
- PCF: 192.168.2.9
- UPF: 192.168.2.10
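Because free5gc depends on these addresses staying fixed, a small sanity check of the plan can catch typos before the manifests are edited. The sketch below encodes the addresses listed above as a Python dictionary and checks that each one is unique and usable inside a single subnet. The /24 prefix (192.168.2.0/24) is an assumption, since the post does not state the mask.

```python
import ipaddress

# Static addresses on the second (sdnvortex) interface of each pod, as listed above.
STATIC_IPS = {
    "AMF": "192.168.2.2",
    "SMF": "192.168.2.3",
    "AUSF": "192.168.2.4",
    "NRF": "192.168.2.5",
    "NSSF": "192.168.2.6",
    "UDM": "192.168.2.7",
    "UDR": "192.168.2.8",
    "PCF": "192.168.2.9",
    "UPF": "192.168.2.10",
}

# Assumption: the second network is a /24; the post does not give the prefix length.
SUBNET = ipaddress.ip_network("192.168.2.0/24")


def validate_plan(plan: dict[str, str], subnet=SUBNET) -> list[str]:
    """Return a list of problems with the IP plan (an empty list means it looks valid)."""
    problems = []
    seen = {}
    for nf, raw in plan.items():
        addr = ipaddress.ip_address(raw)
        if addr not in subnet:
            problems.append(f"{nf}: {addr} is outside {subnet}")
        elif addr in (subnet.network_address, subnet.broadcast_address):
            problems.append(f"{nf}: {addr} is the network or broadcast address")
        if addr in seen:
            problems.append(f"{nf}: {addr} is already used by {seen[addr]}")
        else:
            seen[addr] = nf
    return problems


if __name__ == "__main__":
    print(validate_plan(STATIC_IPS) or "IP plan OK")
```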

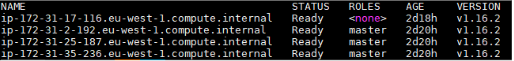

Details about installing KubeOne on AWS can be found in this blog post. A snapshot of the cluster status is given below:

With the KubeOne cluster running on the custom AMI (5.0.0-23-generic kernel and Open vSwitch pre-installed), the Kubernetes manifests for the 5G core components can be retrieved from Bitbucket.

Note: The N3IWF was not used in this post.

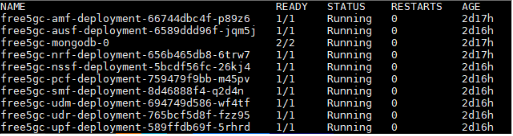

After applying the manifests, the status of the pods can be seen below:

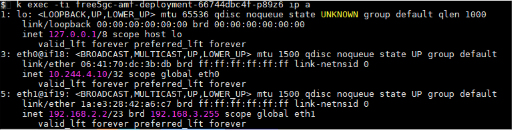
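The pod status check can also be scripted instead of read by eye. A minimal sketch, assuming the JSON output of `kubectl get pods -o json` (add `-n` with whatever namespace the free5gc manifests use); it flags any pod that is not in the `Running` phase or has a container that is not ready. The helper names are hypothetical.

```python
import json


def unhealthy_pods(pod_list: dict) -> list[str]:
    """Return names of pods that are not Running or have a container that is not ready.

    `pod_list` is the parsed output of `kubectl get pods -o json` (a v1 PodList).
    """
    bad = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        containers = status.get("containerStatuses", [])
        all_ready = bool(containers) and all(c.get("ready", False) for c in containers)
        if status.get("phase") != "Running" or not all_ready:
            bad.append(pod["metadata"]["name"])
    return bad


def report(raw_json: str) -> str:
    """Hypothetical helper: summarize `kubectl get pods -o json` output in one line."""
    bad = unhealthy_pods(json.loads(raw_json))
    return "all pods ready" if not bad else "not ready: " + ", ".join(bad)


# Usage sketch (requires kubectl access to the cluster; not executed here):
#   import subprocess
#   raw = subprocess.run(["kubectl", "get", "pods", "-o", "json"],
#                        capture_output=True, text=True, check=True).stdout
#   print(report(raw))
```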

Let’s see the IP details for one of the pods.

We can see that the sdnvortex controller has allocated a second interface, eth1, with the IP address 192.168.2.2 to the AMF pod.

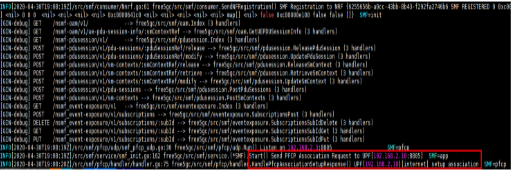
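The eth1 check can be automated by comparing the interface address with the static IP plan. A minimal sketch, assuming the pod image ships an iproute2 build with JSON output, so that `kubectl exec <amf-pod> -- ip -j addr show eth1` returns a JSON array; `<amf-pod>` is a placeholder for the real pod name, and the helper names are hypothetical.

```python
import json


def ipv4_addresses(ip_json: str, ifname: str = "eth1") -> list[str]:
    """Extract the IPv4 addresses of `ifname` from `ip -j addr` output.

    Assumption: iproute2 JSON format, i.e. a list of links, each with an
    "ifname" and an "addr_info" list whose entries carry "family" and "local".
    """
    addrs = []
    for link in json.loads(ip_json):
        if link.get("ifname") != ifname:
            continue
        for info in link.get("addr_info", []):
            if info.get("family") == "inet":
                addrs.append(info["local"])
    return addrs


def has_expected_ip(ip_json: str, expected: str, ifname: str = "eth1") -> bool:
    """True if `ifname` carries the planned static address (e.g. 192.168.2.2 for the AMF)."""
    return expected in ipv4_addresses(ip_json, ifname)
```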

At the time of writing, no open source 5G SA emulator was available to simulate a gNB/UE connecting to the 5G core deployment. However, we can check the logs of the SMF pod to confirm that it was able to initiate a PFCP connection with the UPF:

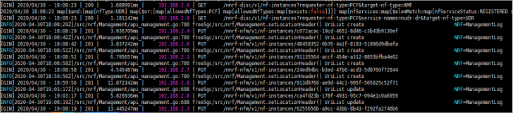
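In PFCP (3GPP TS 29.244), this kind of node-level connection is established with an Association Setup Request, which in this PoC would be sent from the SMF (192.168.2.3) to the UPF (192.168.2.10) over UDP port 8805. To make that exchange more concrete, the sketch below encodes such a request by hand, following the header and IE layout in TS 29.244 and including only the two mandatory IEs (Node ID and Recovery Time Stamp). It is illustrative only: it is not how free5gc builds the message, and it sends nothing.

```python
import ipaddress
import struct

PFCP_PORT = 8805                 # UDP port used by PFCP
MSG_ASSOCIATION_SETUP_REQ = 5    # PFCP message type
IE_NODE_ID = 60                  # Node ID IE type
IE_RECOVERY_TIME_STAMP = 96      # Recovery Time Stamp IE type
NTP_UNIX_OFFSET = 2_208_988_800  # seconds between 1900-01-01 (NTP era 0) and 1970-01-01


def encode_ie(ie_type: int, value: bytes) -> bytes:
    """Encode a PFCP IE: 2-octet type, 2-octet length of the value, then the value."""
    return struct.pack("!HH", ie_type, len(value)) + value


def node_id_ipv4(addr: str) -> bytes:
    # Node ID value: spare (4 bits) + Node ID Type (4 bits, 0 = IPv4), then the address.
    return encode_ie(IE_NODE_ID, bytes([0]) + ipaddress.IPv4Address(addr).packed)


def recovery_time_stamp(unix_seconds: int) -> bytes:
    # Seconds since 1900, i.e. the first four octets of an NTP timestamp (RFC 5905).
    seconds_1900 = (unix_seconds + NTP_UNIX_OFFSET) & 0xFFFFFFFF
    return encode_ie(IE_RECOVERY_TIME_STAMP, struct.pack("!I", seconds_1900))


def association_setup_request(node_ip: str, seq: int, unix_seconds: int) -> bytes:
    """Build a node-related PFCP message (S flag = 0, so the header carries no SEID)."""
    body = node_id_ipv4(node_ip) + recovery_time_stamp(unix_seconds)
    # Octet 1 = 0x20: version 1 in the top three bits, MP = 0, S = 0.
    # The length field excludes the first 4 octets: sequence (3) + spare (1) + IEs.
    header = struct.pack("!BBH", 0x20, MSG_ASSOCIATION_SETUP_REQ, len(body) + 4)
    return header + seq.to_bytes(3, "big") + b"\x00" + body
```

For example, `association_setup_request("192.168.2.3", seq=1, unix_seconds=0)` yields a 25-byte message whose length field is 21.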

Sample logs from the NRF pod show it communicating with the remaining 5G core components over the service-based interface (SBI):

From the logs above, we can see that SBI communication uses RESTful HTTP, as the GET and PUT requests show.
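For reference, the NRF operations defined in 3GPP TS 29.510 map to plain HTTP calls: an NF registers itself with a PUT to `/nnrf-nfm/v1/nf-instances/{nfInstanceId}` and looks up peers with a GET to `/nnrf-disc/v1/nf-instances`. The sketch below only builds (and does not send) such requests with Python's standard library, using the NRF address 192.168.2.5 from the IP plan. The port 8000, the random instance UUID, and the minimal NF profile are assumptions for illustration; the real values are in the NRF configuration shipped with the manifests.

```python
import json
import urllib.parse
import urllib.request
import uuid

NRF_IP = "192.168.2.5"  # NRF address on the second network (from the IP plan)
NRF_PORT = 8000         # Assumption: check the SBI port in the NRF config of the manifests
NRF_BASE = f"http://{NRF_IP}:{NRF_PORT}"


def register_request(nf_instance_id: str, nf_type: str, ipv4: str) -> urllib.request.Request:
    """Build an NFRegister call: PUT /nnrf-nfm/v1/nf-instances/{nfInstanceId}."""
    profile = {  # minimal NFProfile; real NFs send many more attributes
        "nfInstanceId": nf_instance_id,
        "nfType": nf_type,
        "nfStatus": "REGISTERED",
        "ipv4Addresses": [ipv4],
    }
    return urllib.request.Request(
        f"{NRF_BASE}/nnrf-nfm/v1/nf-instances/{nf_instance_id}",
        data=json.dumps(profile).encode(),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def discover_request(target: str, requester: str) -> urllib.request.Request:
    """Build an NFDiscover call: GET /nnrf-disc/v1/nf-instances with NF type filters."""
    query = urllib.parse.urlencode({"target-nf-type": target, "requester-nf-type": requester})
    return urllib.request.Request(f"{NRF_BASE}/nnrf-disc/v1/nf-instances?{query}", method="GET")


# Example: the AMF (192.168.2.2) registering, then looking up an SMF.
amf_id = str(uuid.uuid4())
reg = register_request(amf_id, "AMF", "192.168.2.2")
disc = discover_request("SMF", "AMF")
# urllib.request.urlopen(reg) would actually send the request; it is not called here.
```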

Summary

In this blog post, we deployed a 5G core on a Kubernetes cluster provisioned with KubeOne on AWS and checked the connectivity between its components. In a future post, we will show how to install and run a 5G core on vSphere.

Where to learn more

- Kubermatic KubeOne on Github: https://github.com/kubermatic/kubeone

- Free5gc on Github: https://github.com/free5gc/free5gc

- About Open vSwitch: http://www.openvswitch.org/

- Sdnvortex Network Controller: https://www.github.com/linkernetworks/network-controller